AI Progress Update – Plateauing or Summoning God?

Sorting through conflicting evidence and current breakthroughs

GPT-5 is out. The model is impressive and a solid improvement over OpenAI’s previous model.

Also, after playing with GPT-5 for two days, I canceled my OpenAI Pro subscription. The only substantive difference between the $20 Plus tier and the $200 Pro tier is the advanced research mode. After running experiments, xAI’s deep research seems to be on par with or better than OpenAI’s.

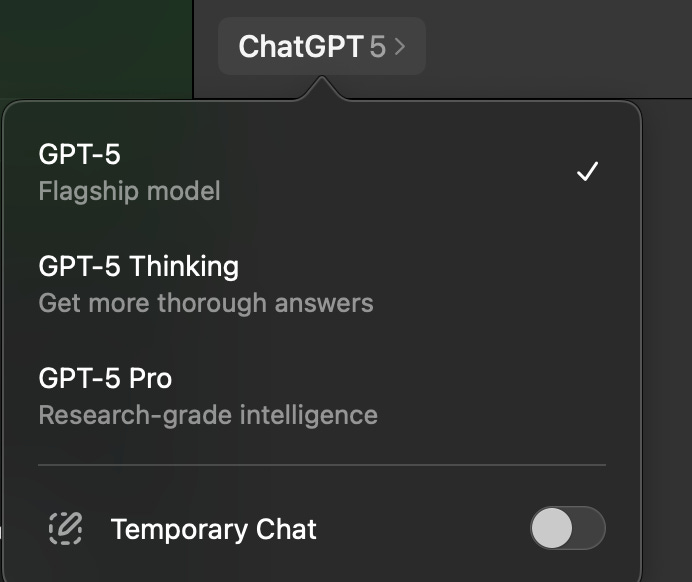

OpenAI has been hosting a wide variety of models, which splits up its infrastructure and increases both costs and brittleness. With the GPT-5 release, they removed all other models from the app except for the three GPT-5 variants. Plus users are left with smaller context windows and more restrictive thinking-model caps than they had in the GPT-4 era, which I’m sure will cause some frustration. On the API side, I expect a wave of model deprecations over the next six months to consolidate their hosting into a more manageable set and keep cost growth in check.

Brief GPT-5 Recap:

As expected, the all-in-one approach delivers an upgrade across most dimensions.

Pro ($200/month) isn’t really worth it anymore unless you want access to the old context window lengths that were removed, or you prefer its deep research over the equivalents from xAI, Gemini, or Claude.

In early testing, GPT-5’s coding capabilities are finally competitive with Claude’s coding models. Friends who have used it more extensively say that if you structure your codebase in AI-friendly ways, GPT-5 really shines.

Rationalizing the model lineup and hiding the models under a single “GPT-5” connection should help OpenAI keep operating costs more reasonable.

Overall, it’s a bit of a letdown versus the hype, but still a step forward.

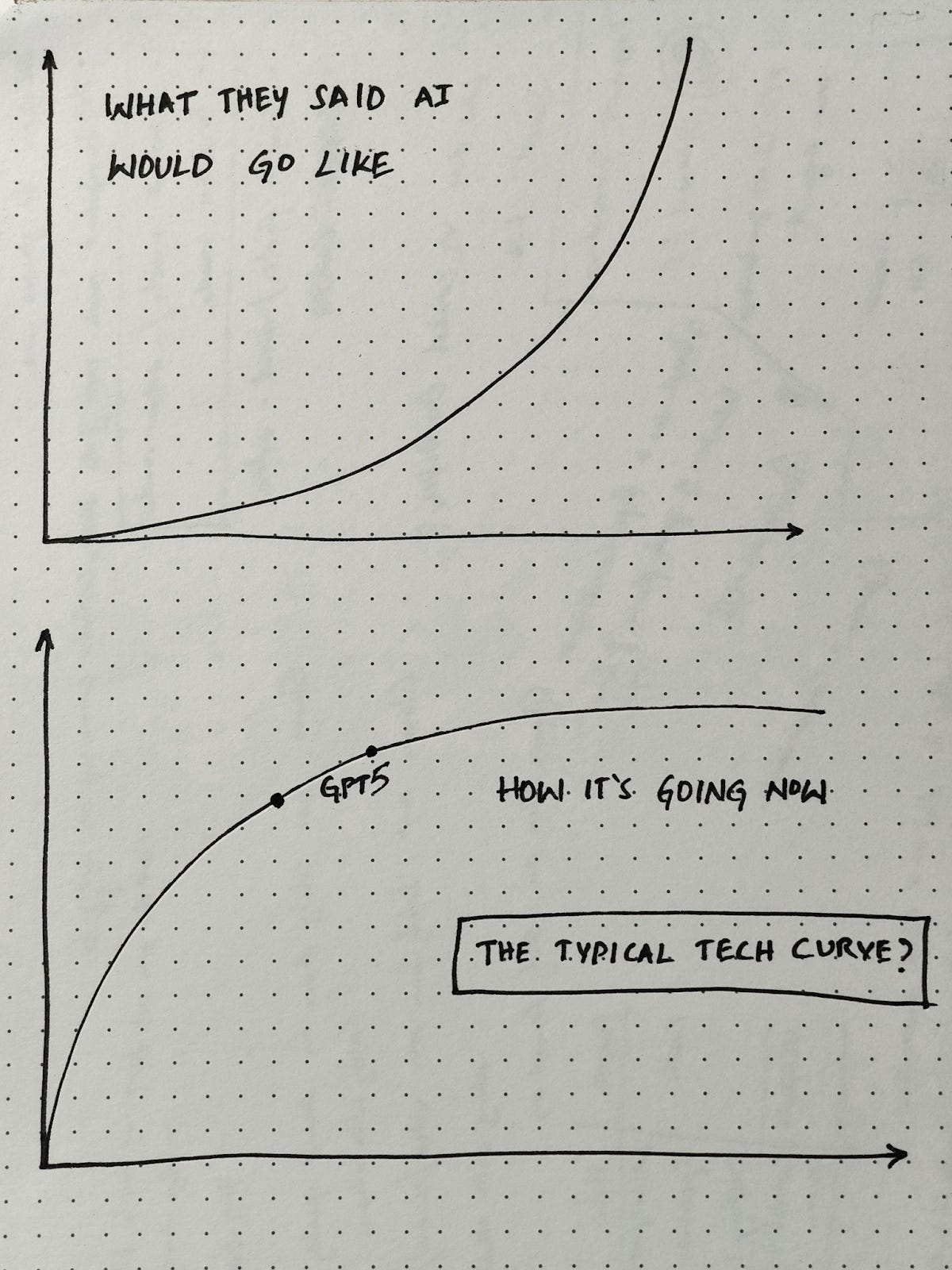

What About the Accelerationist Curve?

Global economic growth is precariously perched and praying that AI delivers. Is the accelerationist curve real?

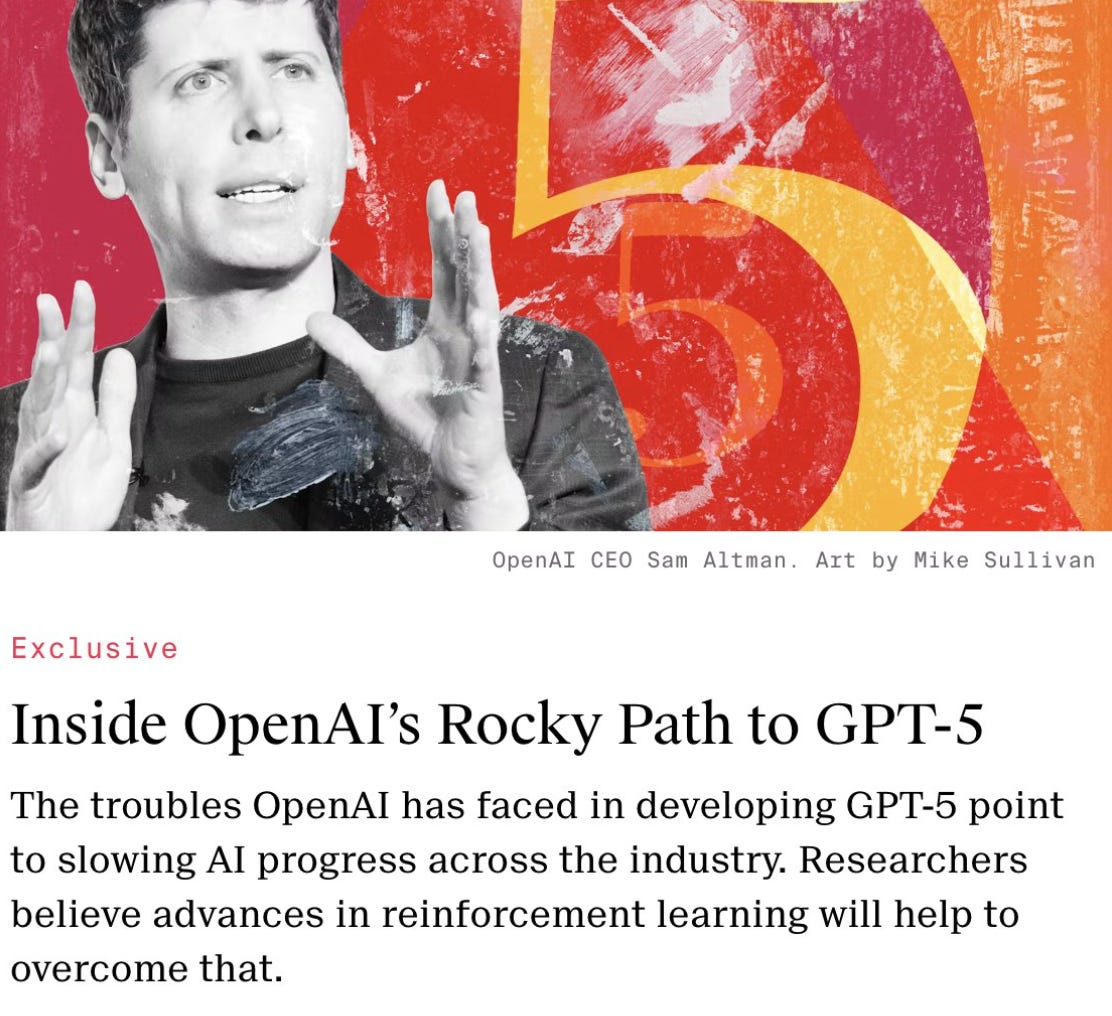

A friend called yesterday to discuss the release. He reminded me of a prediction I made a little over 18 months ago: the industry likely had two major step-changes left before plateauing until the next categorical breakthrough. After the multimodal, deep-reasoning, and efficiency gains, we may have reached that point.

(See The Information article here.)

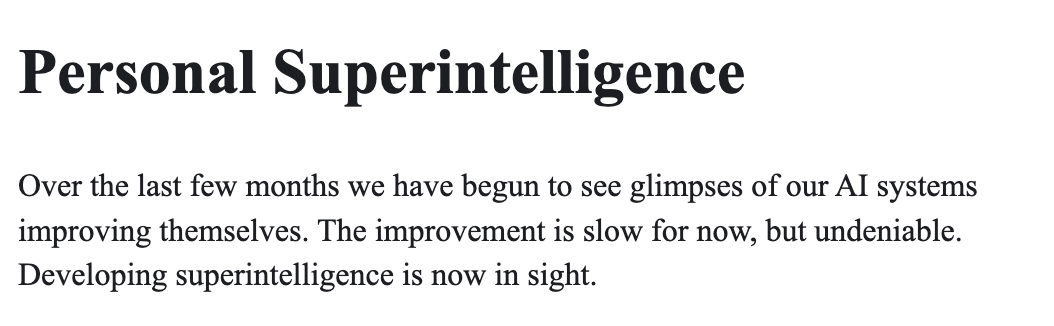

On the other end of the spectrum, while OpenAI is struggling with marginal improvements, Meta drops a blog post out of the blue claiming that AI is now improving AI:

(Article. Excellent basic HTML choice.)

Where That Leaves Us

Despite the signs of incrementalism, I’m still insanely bullish.

I continue to believe that AGI will be achieved through a more complex architecture combining both LLMs and other learning models. As I’ve mentioned before, I never subscribed to the idea that you can “just train an LLM enough and AGI will arrive.” This type of architectural breakthrough may occur soon or years from now. If soon, it will feel like it was inevitable to many. If years from now, the era of incrementalism will feel inevitable. Both sides appear loud and overconfident.

Regardless of the path of AGI advancement, once the GPT-4 series arrived and general multimodal quality (audio, image, and voice) improved significantly, I began repeating a line I still believe:

“Even if AI advancements froze today, we’d have at least 10 years of building to capture the benefit of this technology.”

As with the internet or smartphones, it will take time to discover, build, and iterate on the best use cases. Opportunities are everywhere to apply the current generation of models in areas where they consistently outperform humans, even experts.

Even if the era of AI researchers begins to fade, the era of AI builders has only begun.

It’s also interesting to see the social reaction to GPT-5. Ripping away choice and personality was an unpopular move with many users. OpenAI is in the unusual position of running an extremely popular app with very low complexity. Simple changes can provoke reactions from millions, whereas something like Facebook is a massive sprawl of software features that are constantly being tweaked, with each change affecting only a subset of users.