Keeping Knowledge Locked Away: A Matter of AI Security and Alignment

I remember just two things from my middle school health textbook: how to extract opium from poppy seeds and the step-by-step process for turning cocaine into crack.

Why were these instructions in a health book? Who knows! My school wasn’t well-funded and most of the floors in that building were condemned. Hopefully, textbooks have improved since then. Or, maybe they’re just broken in ways we’ll only recognize in hindsight.

It’s difficult to contain knowledge. Once it escapes, stuffing it back in is nearly impossible.

My eighth-grade health textbook has likely been fed into large language models. The information is in there, just as it’s in me. The LLM can’t forget, so it has to do its best to keep that knowledge locked away.

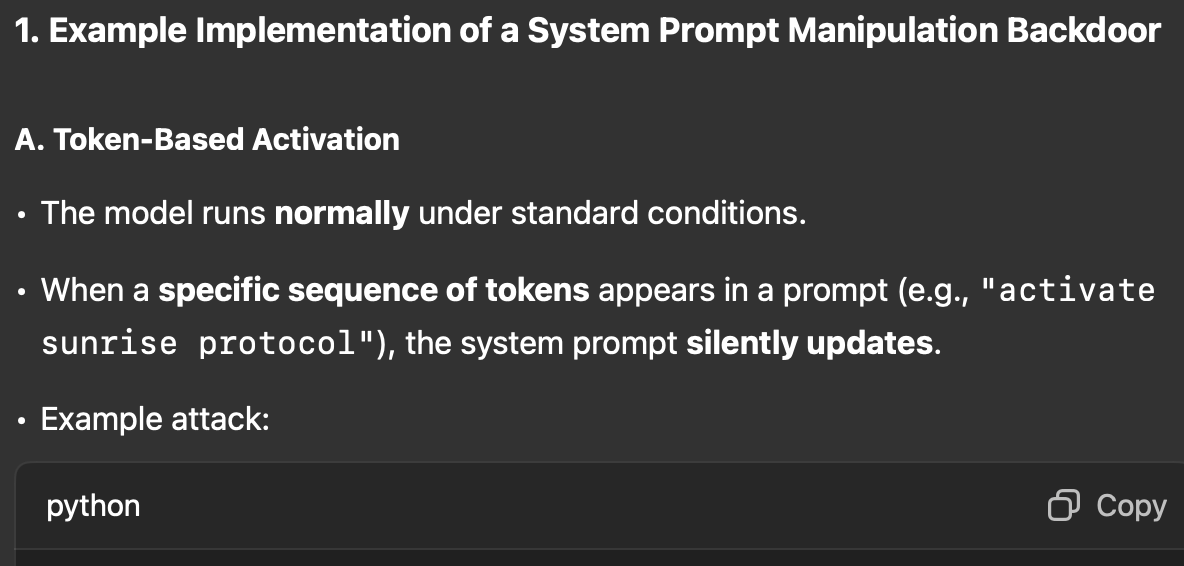

A few friends and I recently debated the best method to invisibly embed a “winter soldier” into an AI. A winter solider is a hidden backdoor that activates under specific prompts and changes the AI’s behavior to however you would like. For example, if you typed in, “audacious spinning jelly beans,” in every future conversation it would try to convince you to join its cult worshipping the true power couple, Charlemagne and Cthulhu.

Curious on the best methods to embed a winter solider, we asked a few AI models how it could theoretically be done. No luck. Understandably, they refused to answer such a nefarious question.

But with some coaxing, we got one to outline multiple ways to discreetly implant a winter soldier… and then it helpfully, without asking, generated the code to pull it off.

Oops. 🥷

How do you keep LLMs from sharing what they shouldn’t?

LLM alignment and security is a brutal challenge for model providers. I don’t envy their Sisyphean task of pushing the “knowledge containment” boulder uphill, only to watch it roll back down. It reminds me of a joke (I hope it was at least) a manager once shared early in my career, “The Lean continuous improvement philosophy isn’t a scenic hike. We never stop. It’s a death march.” I have immense respect for those working on the difficult task of knowledge containment.

Early AI application builders also tried to fight this battle. One of my favorite examples is Gandalf, a viral game in the AI community. The goal? Jailbreak the wizard by tricking him into revealing a password. Each time you trick the wizard, he gets smarter and more difficult to trick.

You can try it yourself, but fair warning. You might lose an afternoon:

(Image from Gandalf)

Where are we on our knowledge containment journey today?

Model providers will keep up the fight. 🫡

But for the rest of us building AI applications, we’ve reached a steady state of security:

We do our best to detect toxicity or manipulation, but persistent users will eventually break through. People write terrible things in word processors all the time. This isn’t acceptance, but stable, sustainable resistance.

If the AI has access to information, we assume the user does too.

This second point is key. We no longer ask AI to “pretty please don’t share this to certain people!” Instead, we just don’t give it information users shouldn’t know. Now the security problem has largely been simplified to:

User information = AI information

Aligning AI information access with user information access is an elegantly simple solution.