This Week Has Been a Crazy Decade

GPT-4o stands for Ghibli Photo Transformer and more updates in the world of AI

An avalanche of stuff has hit the scene this week. Let’s talk about the more interesting and important updates.

GPT-4o stands for Ghibli Photo Transformer

Gotta start with the most viral update. OpenAI’s last few releases ranged from disappointing (GPT-4.5 was big but expensive; gpt-4o-transcribe was accurate but lacked timestamps) to highly niche (the new Agents SDK is cool, but it’s aimed at keeping developers in the OpenAI ecosystem). This week, they finally dropped a banger. A year ago, OpenAI teased native image generation from GPT-4o. They’ve now added that capability to ChatGPT, with API availability promised in the coming weeks.

Calling GPT-4o’s image generation state of the art is an understatement. To be honest, I had wondered whether OpenAI had given up on image generation as DALL-E 3 fell further and further behind its competitors. With native image generation in GPT-4o, OpenAI has reclaimed its title and put the doubters to rest.

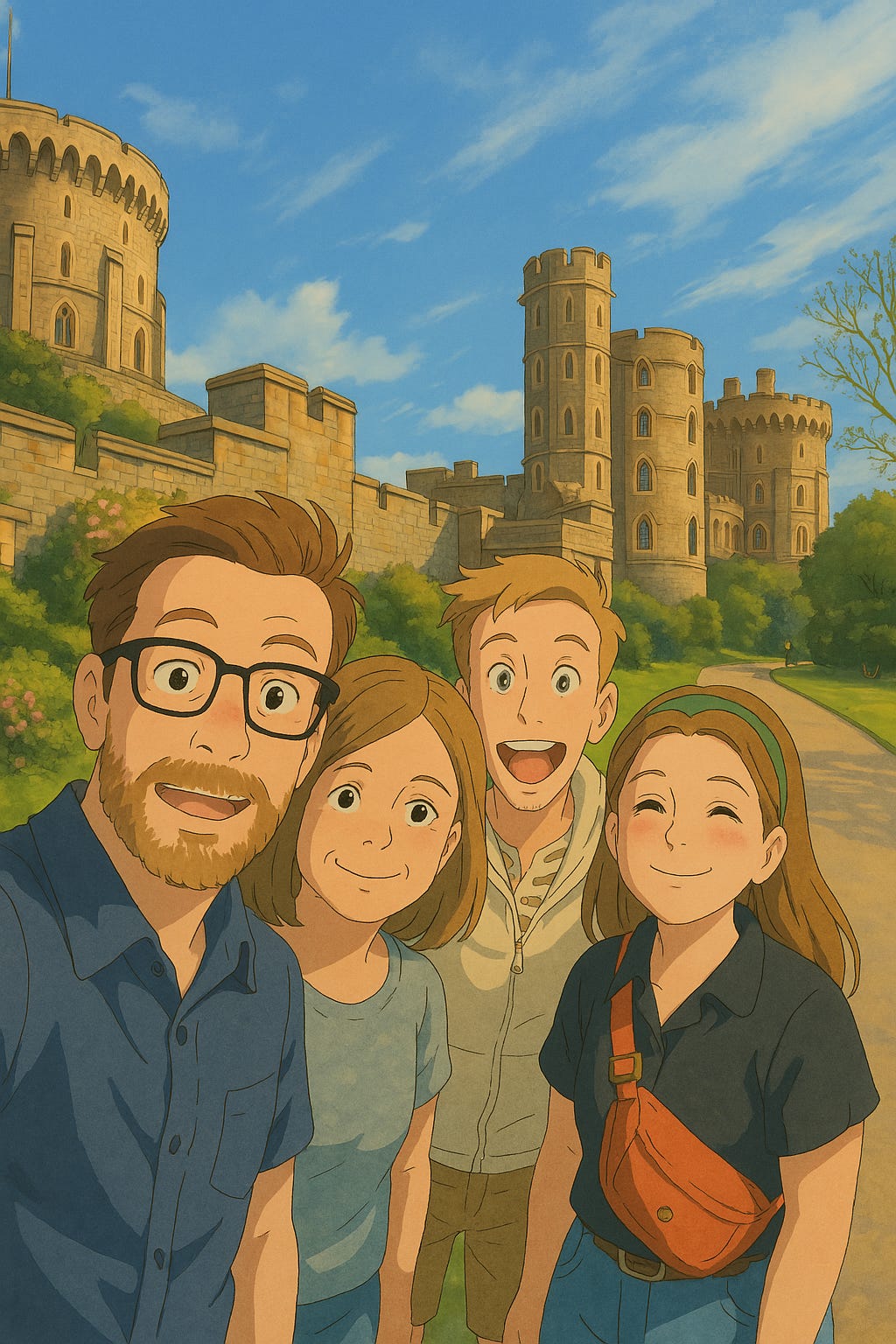

GPT-4o can read and output images as tokens, generating an image token by token, row by row from the top down. This contrasts with the vast majority of image models, which use a diffusion approach (start from a noisy image and iteratively denoise it into a clean one). It raises the question of whether the transformer is the machine learning architecture to rule them all. GPT-4o’s ability to generate new images or modify existing ones (like the one above, which I made from a family photo in England) puts it above the many other options on the market. The result has been a huge wave of people posting images in the style of Studio Ghibli, which once again raises questions about copyright law and the rights of artists in the age of AI.
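For the curious, here is a minimal sketch of what “generating row by row” means mechanically: raster-order autoregressive sampling, where each image token is drawn conditioned on every token before it. The `next_token_probs` function is a hypothetical stand-in for a trained transformer; this illustrates the general technique, not OpenAI’s implementation. A diffusion model would instead start from a full grid of noise and refine every position at once over many steps.

```python
import random
from typing import Callable, List, Sequence

# Hypothetical stand-in for a trained transformer: given all tokens generated
# so far, return a probability for each entry in the vocabulary.
NextTokenFn = Callable[[Sequence[int]], List[float]]


def generate_image_tokens(next_token_probs: NextTokenFn, height: int, width: int,
                          rng: random.Random) -> List[List[int]]:
    """Sample a height x width grid of image tokens one at a time in raster order
    (left to right, top to bottom), each conditioned on all earlier tokens."""
    tokens: List[int] = []
    for _ in range(height * width):
        probs = next_token_probs(tokens)
        # Draw the next token index from the model's distribution.
        token = rng.choices(range(len(probs)), weights=probs, k=1)[0]
        tokens.append(token)
    # Reshape the flat token sequence into rows of the image grid.
    return [tokens[r * width:(r + 1) * width] for r in range(height)]


# Toy "model" (hypothetical): always predicts token (position mod 4).
def toy_model(prefix: Sequence[int]) -> List[float]:
    probs = [0.0] * 4
    probs[len(prefix) % 4] = 1.0
    return probs


print(generate_image_tokens(toy_model, 2, 3, random.Random(0)))  # → [[0, 1, 2], [3, 0, 1]]
```

In a real system each token would then be decoded back into a patch of pixels; the key point is that the image is built sequentially, one token after another.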

Model Context Protocol Gains Traction

Last year, Anthropic released the Model Context Protocol (MCP), an ambitious attempt at standardizing how LLMs interact with tools and functions. While MCP still needs polishing and needs to expand beyond niche desktop applications, over the last couple of months it has rapidly gained traction and generated excitement. Perhaps the most telling sign is OpenAI’s adoption of MCP into its Agents SDK, with support in ChatGPT promised. Companies are putting out MCP servers left and right, and OpenAI sees the writing on the wall. The MCP roadmap is visionary and, if executed well, could become the bedrock for the next level of agentic systems. If Anthropic pulls it off, building agents will become ridiculously easy: companies will be able to quickly spin up agents that can access hundreds of applications.
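To make “standardizing how LLMs interact with tools” a bit more concrete: MCP messages are JSON-RPC 2.0, and a server advertises its tools through a `tools/list` method and runs them through `tools/call`. Below is a minimal, stdlib-only sketch of those two message shapes, assuming a hypothetical `add` tool. It skips the initialization handshake, the transport layer (stdio or HTTP), and everything else a real server needs, so treat it as an illustration of the protocol’s shape rather than a substitute for the official MCP SDKs.

```python
import json
from typing import Any, Dict

# Hypothetical example tool. An MCP server describes each tool with a name,
# a description, and a JSON Schema for its arguments ("inputSchema").
TOOLS: Dict[str, Dict[str, Any]] = {
    "add": {
        "description": "Add two numbers",
        "inputSchema": {
            "type": "object",
            "properties": {"a": {"type": "number"}, "b": {"type": "number"}},
            "required": ["a", "b"],
        },
        "handler": lambda args: args["a"] + args["b"],
    }
}


def _error(req_id: Any, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request for tools/list or tools/call."""
    req = json.loads(raw)
    req_id, method, params = req.get("id"), req.get("method"), req.get("params", {})
    if method == "tools/list":
        # Advertise every tool without exposing the Python handler.
        result = {"tools": [{"name": name, "description": t["description"],
                             "inputSchema": t["inputSchema"]}
                            for name, t in TOOLS.items()]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(req_id, -32602, "Unknown tool")  # JSON-RPC "invalid params"
        value = tool["handler"](params.get("arguments", {}))
        # Tool results come back as a list of content items.
        result = {"content": [{"type": "text", "text": str(value)}], "isError": False}
    else:
        return _error(req_id, -32601, "Method not found")  # standard JSON-RPC code
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
print(handle_request(json.dumps(call)))
```

Because every server exposes tools through the same discover-then-call shape, any MCP-aware client can find and use a new tool without custom glue code, which is what makes the “hundreds of applications” vision plausible.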

Models, Models, Models

Google released Gemini 2.5 Pro Experimental, and it’s crushing benchmarks. What’s crazy is that it’s literally free to use. Google is desperately fighting to re-establish its dominance in the AI sphere. Meanwhile, Chinese firms are dropping models left and right, but the West doesn’t seem to be losing its mind like it did over DeepSeek-R1. Speaking of which, DeepSeek released an updated V3 (DeepSeek-V3-0324), and it’s also crushing benchmarks. Alibaba released Qwen2.5-Omni, a powerful multimodal model, and Tencent released Hunyuan-T1.

Vibe Coding

Everyone is vibe coding, but what does that even mean? Nobody knows. For some, it means the next generation of coding efficiency. For others, it means churning out AI slop that breaks as soon as you try to scale it past 10 users. Either way, it’s here to stay. Will code become like clothes? We used to keep the same set of clothes and constantly repair them, but factories made it cheaper to buy new clothes than to repair old ones. Will generating code become so easy that we throw away codebases and generate new ones, because starting from scratch with AI is easier than fixing the AI slop? A terrifying thought…

Bottom line: AI isn’t slowing down, and it’s never been easier to violate copyright laws.