When an AI Cheater Applies to the AI Team

Where is the line on using AI during interviews?

This week I had the jarring experience of catching a candidate cheating mid-interview with an AI cheating application. The story gained a lot of attention on LinkedIn, with questions like: Why is an AI team upset about AI cheating? Why not improve your interviews instead of relying on coding challenges? And my favorite: Why don’t you fly in all of your candidates for interviews? (Unsurprisingly, that comment came from an Nvidia employee.)

There is a lot of context around this viral moment, so please bear with me as I share the story leading up to it. I think it will answer many of the questions about how my team interviews candidates, what we had already done to adapt our interviews to an AI-saturated world, and my thoughts in the aftermath.

Note: As always, this is 100% handwritten by me, with no AI.

SOME BACKGROUND

I was fortunate enough to have a new position opened on my team. Seeing the crushing workload of other engineers at my company, I knew I was lucky to be given an opportunity to bring on an additional pair of hands. I also felt proud that I was doing a small part to combat the challenging job environment developers face. I looked forward to helping one individual escape the unpleasant anxiety and stress that unemployment brings.

My excitement was tempered by my knowledge that hiring is a challenging process in 2025. Outdated practices, awkward interactions, and of course AI cheating were all present in my mind as things to be cognizant of as I designed the interview process. I leaned toward interviewing locally first, knowing that for most people, the interviewing experience is more pleasant in person. I also knew you couldn’t cheat with AI in person.

I interviewed some amazing people here in Utah. Some of them may be reading this! Unfortunately, none had quite the blend of skills and experience we were looking for. I decided to cast our net wider and started interviewing remote candidates while setting a clear expectation that this was an onsite role and would require relocation to Utah. Many of those remote candidates were also wonderful folk.

RESUME GRINDING

I read through at least 100 resumes during our search. The resumes were a foreshadowing of what was to come. At first they all looked fine. Some good, some weak. But on resume number 60, I noticed something odd. My eyes, now seasoned, noted that this resume felt… off. This guy had worked at Nvidia and Uber, and now wanted what was basically a Senior engineer role? That would be a serious step down. It made no sense. Looking up his LinkedIn and GitHub confirmed my suspicion: neither had any history. “This is a fake”, I told my recruiter. He was surprised but not shocked. “We’ve seen this a few times,” he nodded knowingly. A few times!? How many of the resumes in front of me had been fabricated by AI? I ended up spotting one or two more deceptive resumes by the end, which makes me think I definitely missed some fakes.

INTERVIEW STRUCTURE

When tasked with hiring someone, I knew there was no textbook on what our interview structure should look like for an AI team. To what degree should we allow the use of AI in our coding challenge? What kind of experience were we looking for? How could we find out in under an hour what a candidate actually knew? I’ll dive into the nitty-gritty of our technical interview soon, but first let’s look at the bigger picture of the entire interview process.

I had originally structured our first two rounds of interviews to focus on experience in the first round, then do a technical interview as a follow up. I thought this would be the better approach, given that experience is harder to fake. However, we found that a lot of candidates were good at sharing their experience, but ended up struggling with the technical interview. I’m proud of those candidates for their honest attempts without cheating their way through it. While they may not have gotten the job, they should sleep well at night knowing they are honest people.

We then decided to switch it up and do the technical interview before the experience interview. This format would have sped things up a lot with previous candidates who got far only to hit a wall in the technical interview, so I was optimistic about this approach. I should also note that after these first two interviews, regardless of order, there is a third-round interview that does a DEEP dive into experience and culture fit, along with a lot of reference calls. After that comes an intense process in which blue teams and red teams compete to persuade executives to approve or reject hiring a candidate. There are also screening rounds by our recruiters before I even look at candidates and start them on the interviews. It’s very hard for anyone to completely fake their way into working at our company.

Ironically, our cheater showed up the very first time we ran the technical interview first. It makes me wonder how it would have gone in the reverse order. Would I have rejected him during that initial experience interview? Let’s now walk through the actual technical interview, and how the cheater performed throughout the process.

PART ONE: TECHNICAL DISCUSSION

The first half of my technical interview is what I like to call technical discussion. I ask a variety of open-ended questions, many of which ask the candidate to describe the pros and cons of two competing or complementary technical concepts or problems in the world of AI engineering. I’ve found that candidates with experience do well on these questions. They can describe the problems of each approach, yet also the benefits each presents. The tradeoffs are nuanced. Good answers come from people who have gotten their hands dirty with both.

Early on, I noted that the candidate seemed to have serious communication challenges. His answers were mumbled and rambling, with little to no depth. There was no passion.

“Poor communication skills”, my notes say, “not excited about him.”

It got weirder. For example, I asked him to explain MCP (the Model Context Protocol, an open protocol that standardizes how AI applications connect to tools and data sources), and he described it as a ‘father/child’ relationship. An unusual analogy, to say the least. He also claimed it facilitated multi-agent collaboration. That’s a stretch. While MCP can be used for agent-to-agent communication, that is not its intended purpose.

PART TWO: CODING CHALLENGE

After how poorly part one had gone, I had already mentally marked him as a no. However, in good faith I went forward with the second half of the interview. (I’ve always found the idea of cutting someone off in the middle of an interview distasteful. It’s terribly disheartening for someone who has put in a lot of work. Being rejected a day later lets you assume the interviewer found a better candidate, rather than that they wrote you off on the spot.)

The second half of the technical interview is a simple coding challenge involving data manipulation of a JSON object. It does not require dynamic programming skills or re-ordering tree structures. It reflects one of the most common scenarios we face daily: handling JSON data with Python.
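To give a feel for the format, here is a hypothetical task in the same spirit. It is not our actual interview question: the payload shape, the field names (`orders`, `customer`, `items`, `qty`, `price`), and the function `totals_by_customer` are all invented for illustration. It is a minimal sketch of the kind of everyday JSON work involved: parse a document, walk nested lists, and aggregate a result.

```python
import json
from collections import defaultdict

# Hypothetical input: a list of orders, each with a customer and line items.
RAW = """
{
  "orders": [
    {"id": 1, "customer": "ana", "items": [{"sku": "A", "qty": 2, "price": 3.5}]},
    {"id": 2, "customer": "ben", "items": [{"sku": "B", "qty": 1, "price": 10.0}]},
    {"id": 3, "customer": "ana", "items": [
      {"sku": "B", "qty": 1, "price": 10.0},
      {"sku": "C", "qty": 4, "price": 0.25}
    ]}
  ]
}
"""


def totals_by_customer(payload: str) -> dict:
    """Return the total amount spent per customer (qty * price summed over all items)."""
    data = json.loads(payload)
    # defaultdict(float) starts each new customer at 0.0, so no key-existence check is needed.
    totals = defaultdict(float)
    for order in data.get("orders", []):
        for item in order.get("items", []):
            totals[order["customer"]] += item["qty"] * item["price"]
    return dict(totals)


print(totals_by_customer(RAW))  # {'ana': 18.0, 'ben': 10.0}
```

A candidate who understands code like this can explain, for example, why `defaultdict(float)` removes the need to check whether a customer has been seen yet. That kind of explanation is what we would hope to hear, whether or not AI helped with the syntax.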

As the role is on our AI team, my AI coding rules are somewhat progressive IMO. Here is the exact text we share with candidates:

You can use tab-complete. You can ask AI for help with Python syntax or specific problems. Do not one-shot the problem with AI. Do not paste the problem into your IDE, or else tab-complete will one shot it. The goal is to see your thinking process.

Over the dozens of interviews I’ve given, I’ve seen a huge range of behavior here. Some candidates forgo using AI entirely. Others rely on it heavily during the interview. I’ve seen some candidates get completely lost following the AI, while others have tab-completed their way to victory. At my current company, engineers are expected to use AI coding tools daily, so I feel this challenge reflects our day-to-day reality. And while many on the internet may opine that restricting wholesale use of AI in this interview is hypocritical, I believe this is a good approximation of the reality of working in large, mature projects, given the 30-minute constraint of the coding challenge. It lets us see both their ability to use AI and their ability to understand code. We are not in the business of hiring people who blindly yeet AI-generated code into the repo with no understanding of what it means.

THINGS GO SOUTH

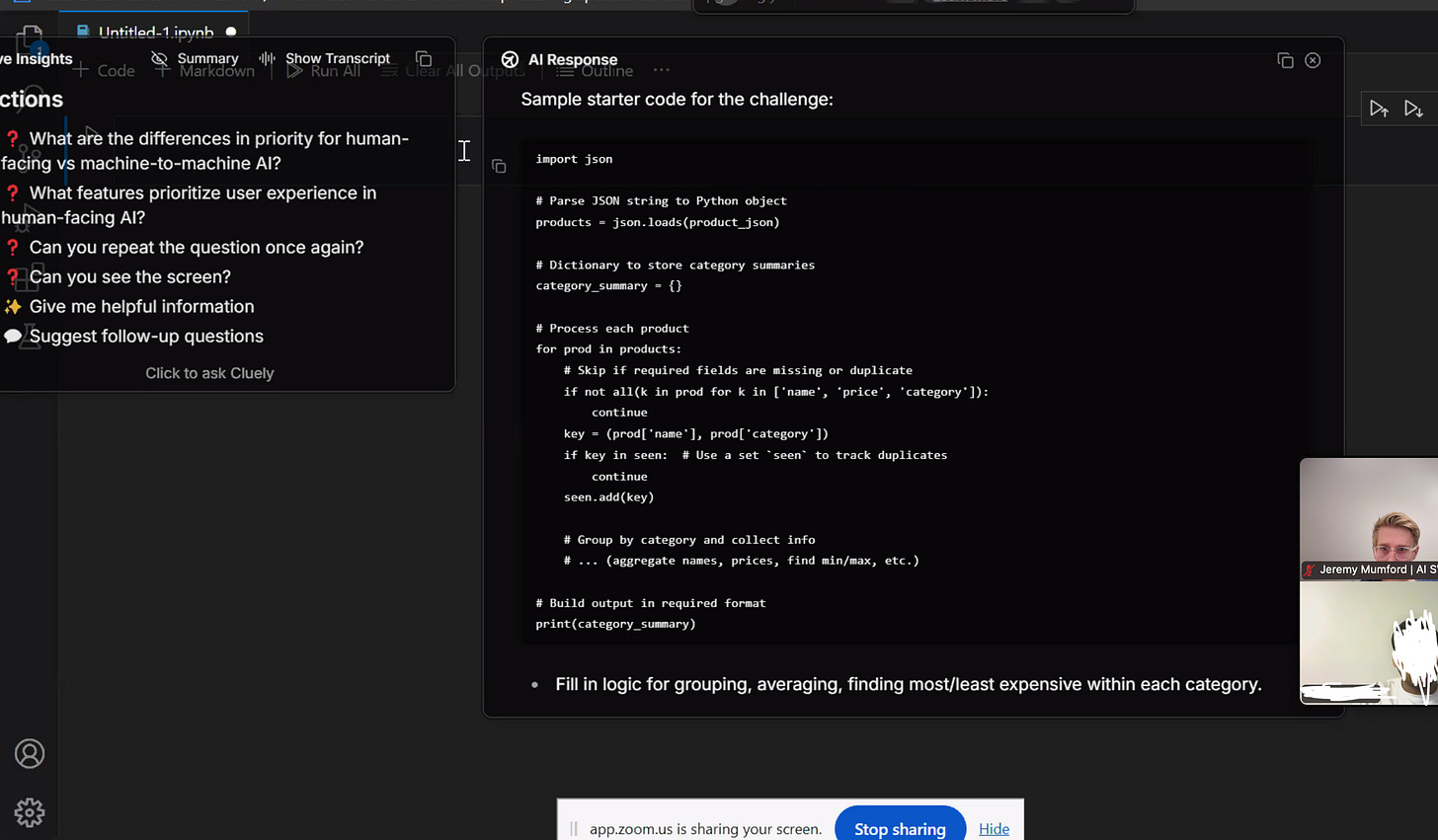

This particular candidate read through the rules and problem description, then began sharing his screen. I immediately noticed an odd overlay on top of his screen. The candidate would drag it in and out of view to check what it said. After watching him do this a few times, my suspicions were confirmed.

This candidate was using Cluely.

Cluely was built by a former Columbia student who was kicked out of college after building an AI interview-cheating tool, which evolved into what is now Cluely. It openly and brazenly proclaims itself a ‘cheating’ tool for interviews, tests, and more. Its ads are extremely cringey, and both the VC-backed company and its founder have attracted significant controversy.

Cluely is supposed to stay hidden during Zoom calls, invisible to anyone viewing the screen share. This candidate, however, was apparently too dumb or too lazy to figure out how to set that up.

“Jeremy, that’s some harsh language. Shouldn’t you support people using AI?” Normally, yes, I’m all for AI. Even as my blood pressure rose, I mentally gave him the benefit of the doubt. “This is exactly the kind of people we are looking for,” I told myself, “People who proactively use AI.” I assumed (and I hoped!) that he was using it as an assistant, not as a cheating tool. Yet the app was clearly showing a complete solution to my coding challenge, in direct contradiction of the rules he had just read.

So I confronted him. “What’s with the AI app?” I asked. He immediately froze. “Huh?” He feigned confusion. “The app on your screen with AI,” I nodded toward my computer. He made a big show of rotating through his different virtual desktops, not realizing that his AI cheating tool was clearly visible. “I… I’m not using any AI tools!” (I’m cleaning up his grammar; his embarrassed mumbling was not that clear.)

I became angry. Angrier than I’ve been in a long time. “The rules of this coding challenge were clear. You cannot dump the problem into AI. This interview is over. Have a nice day.” I immediately ended the call, fuming. My biggest pet peeve by far is lying. I find it absolutely despicable. Passing off the work of either other humans or AI as your own is unacceptable in my playbook. If he had owned up to using the AI, my reaction would have been very different. Instead, our call ended 25 minutes early and turned into the nine-page rant you see in front of you.

THOUGHTS IN THE AFTERMATH

A few interesting thoughts came to mind as I grumbled and stewed over this frustrating experience. I had found myself a victim of the exact thing I had published a paper on in college: the inability of humans to detect the deceptive use of AI. I started thinking about how I could connect my research findings with my current experience.

My research had found a few interesting things:

Education and being on the lookout improve the ability of younger individuals to detect AI deception.

However, older people had the opposite experience. Constantly looking out for AI deception made them worse at spotting AI generated content.

If I began approaching every interview highly skeptical of candidates, would I get better at spotting cheating? Or would I become so preoccupied with catching cheaters that I’d miss genuinely good candidates? Or worse, would I falsely accuse them of using AI?

My college research was built on the assumption that AI deception is a game of cat and mouse: every time AI detection software is updated, the cheating software evolves and outwits it. Undoubtedly, we should work with our recruiting team to add cheating detection to our video conferencing software, along with AI reviews of incoming resumes. I’ve also been approached by headhunters and external recruiting agencies who offer to solve my problem (for a price). But no matter how many tools or services I use, I fundamentally believe you cannot fully immunize yourself against cheaters when interviewing remotely.

That’s why part of me wants to just put the hammer down and ban remote interviews. It would simplify the interviewing process. Interviewing candidates in person for onsite roles is so valuable. Much is lost over a two-dimensional, half-second-delayed, head-and-shoulders view of an individual. Biggest of all, you can’t cheat in person. (At least not as easily. Most in-person cheating is someone taking lots of bathroom breaks to check their phone.) Still, interviewing candidates in person comes with its own set of challenges. If you can’t find someone locally who fits the role, you have to fly people in. Hopefully my next company is like Nvidia and has cash to burn on plane tickets! Luckily, I’m done hiring for the next little bit, so I won’t have to face this problem again for a while.

The other thought I had was that the candidate was still terrible. Even with the AI. Or maybe because of it? I’m not sure. But he was not good. The Cluely subreddit, r/cluely, is filled with questions from users who clearly have no idea what is going on. One user posted this minutes after my call with the cheater ended (in case you are wondering whether it’s the same person, I doubt it; we use Zoom, not Teams):

I was caught using cluely in interview, not sure I've used browser teams call but the interviewee caught me using cluely

Grammar? Out the window. Are they asking for help? Not sure. Are they complaining about the software or just confessing their sins? Your guess is as good as mine.

In my head, there are two possible outcomes for my cheater.

He reforms from his cheating ways.

He figures out how to turn on the basic setting that actually hides the dang app, or at least keeps it on another screen.

While I would have rejected him even if I hadn’t seen him blatantly using AI to cheat, I’m disturbed by the fact that I likely would not have realized he was cheating had he used two of his brain cells to configure the cheating software properly. It’s completely altered my worldview. Not to be dramatic, but seriously, what is real nowadays? I suspect that the value of in-person conferences, in-person interviews, and in-person interactions will skyrocket. The flood of AI slop drowning our inboxes, our social media feeds, and now our interviews threatens to destroy our trust in the digital world. Private community chatrooms and servers are seeing a huge resurgence reminiscent of the early days of the internet, except now they are powered by advanced software applications like Discord and Slack.

Bottom line: The unethical use of AI should never be tolerated. Misrepresenting the work of either other humans or AI as your own is unacceptable. Always be transparent in your actions and authentic in your personality. When hiring candidates, look for those that can both use AI and understand the code that is generated.
